How to Measure CSAT Without Surveys: A New Way to Measure User Experience

Quick: what’s the best way to get feedback on how well your conversational bot is meeting customer expectations? I bet the first thing that came to mind was: “A survey!”

User surveys may be one of the most common techniques for measuring UX and customer satisfaction. But do surveys actually give you the insights you need on your user experience, and are there better options?

Let’s look at the nature of the data surveys deliver—and explore some alternative approaches to measuring customer satisfaction (without surveys).

The business impact of increasing CSAT—and the case for measuring it more effectively

High customer satisfaction (CSAT) delivers benefits far beyond warm, fuzzy feelings. Satisfied customers are the lifeblood of thriving businesses. They are more likely to return and make repeat purchases, driving up revenue and your company’s overall value. Happy customers also become brand ambassadors, recommending your products or services through word-of-mouth and positive reviews. In the competitive digital landscape, this free and powerful marketing is invaluable.

But there’s a flip side. Low CSAT leads to customer churn, negative reviews, and a damaged reputation that’s difficult to repair. Understanding how satisfied your customers are is the first step to improving the situation and unlocking the business benefits mentioned above. This makes CSAT a key metric, which is why effective measurement techniques are so important.

It’s all the more important when zeroing in on chatbot performance. Chatbots are often the first line of interaction customers have with your brand. A well-functioning bot can elevate the customer experience, driving satisfaction. On the other hand, a clunky chatbot that misunderstands requests or can’t resolve issues quickly leads to frustration. This negative experience can damage brand perception and harm containment rates, increasing costly escalations to human agents. Getting timely, accurate CSAT data on your chatbot is critical for continuously improving your customer-facing AI and ultimately achieving a competitive edge.

Rethinking the CSAT survey for measuring bot experience

The typical source of information on bot experience is the traditional exit survey: a quick survey launched post-conversation, before the customer moves on to another task.

Surveys are popular for measuring CSAT because they can easily be tacked onto the end of a conversation. A wide range of tools supports this, offering customization for branding, questions, notification workflows, reporting, and so on. They seem like an easy way for a business or service team to generate feedback.

Surveys have been part of the digital landscape for so long that their actual value often goes unquestioned. However, there are challenges with relying on a survey-based approach to measure how your customers feel about their digital interactions. These include:

- Most people skip them, so response rates are low, often as little as 5%.

- Binary options like ‘thumbs up or down’ give minimal insight.

- Star ratings, typically 1-5, still provide no detail.

- Text response buttons can only highlight specific issues.

- Freeform text responses can be hard to analyze in volume.

Whatever the response type, surveys can help you generate some primitive feedback numbers or insights. But they provide next-to-no color on how your customers actually feel about their user experience.

Beyond delivering only basic numbers, survey results also tend to suffer from:

- Subjective responses: terms like “poor,” “okay,” “satisfied,” “good,” and “highly satisfied” mean different things to different customers.

- A skew toward complaints: for each customer who complains, around 26 never respond, according to research.

- Cultural bias: people have their own definitions when it comes to survey language, and regional differences can be large.

- Short-termism: a CSAT score lacks the impact of other survey tools, and customers increasingly feel surveys are neither engaging nor valuable.

Alternative Techniques for Measuring CSAT

As conversational bots continue to grow in popularity as a means of automating customer service, relying on traditional surveys is a flawed approach to understanding the thousands or even millions of interactions your organization and its bots have with customers.

Here are some more effective techniques for measuring customer satisfaction, including bot experience quality, without surveys:

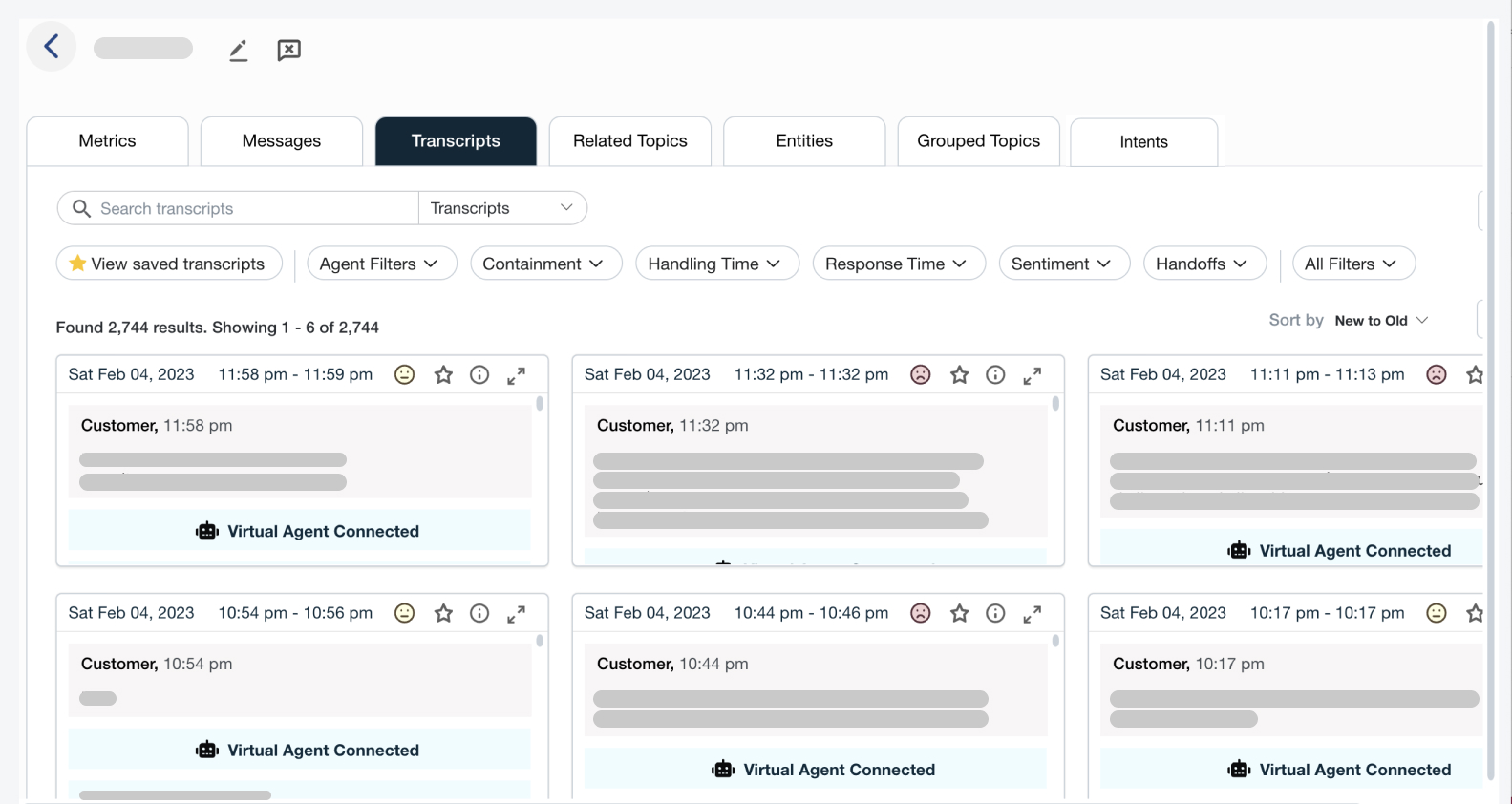

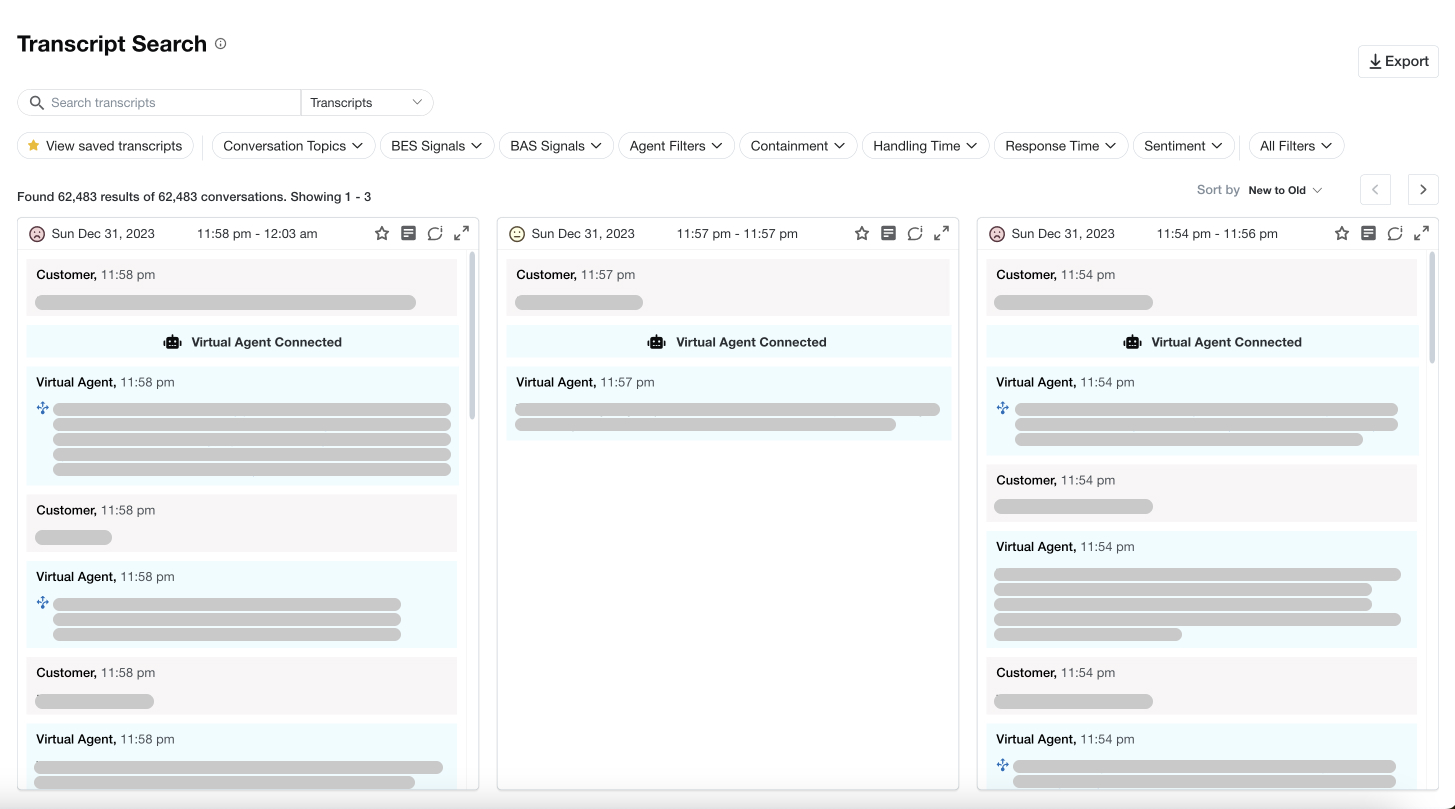

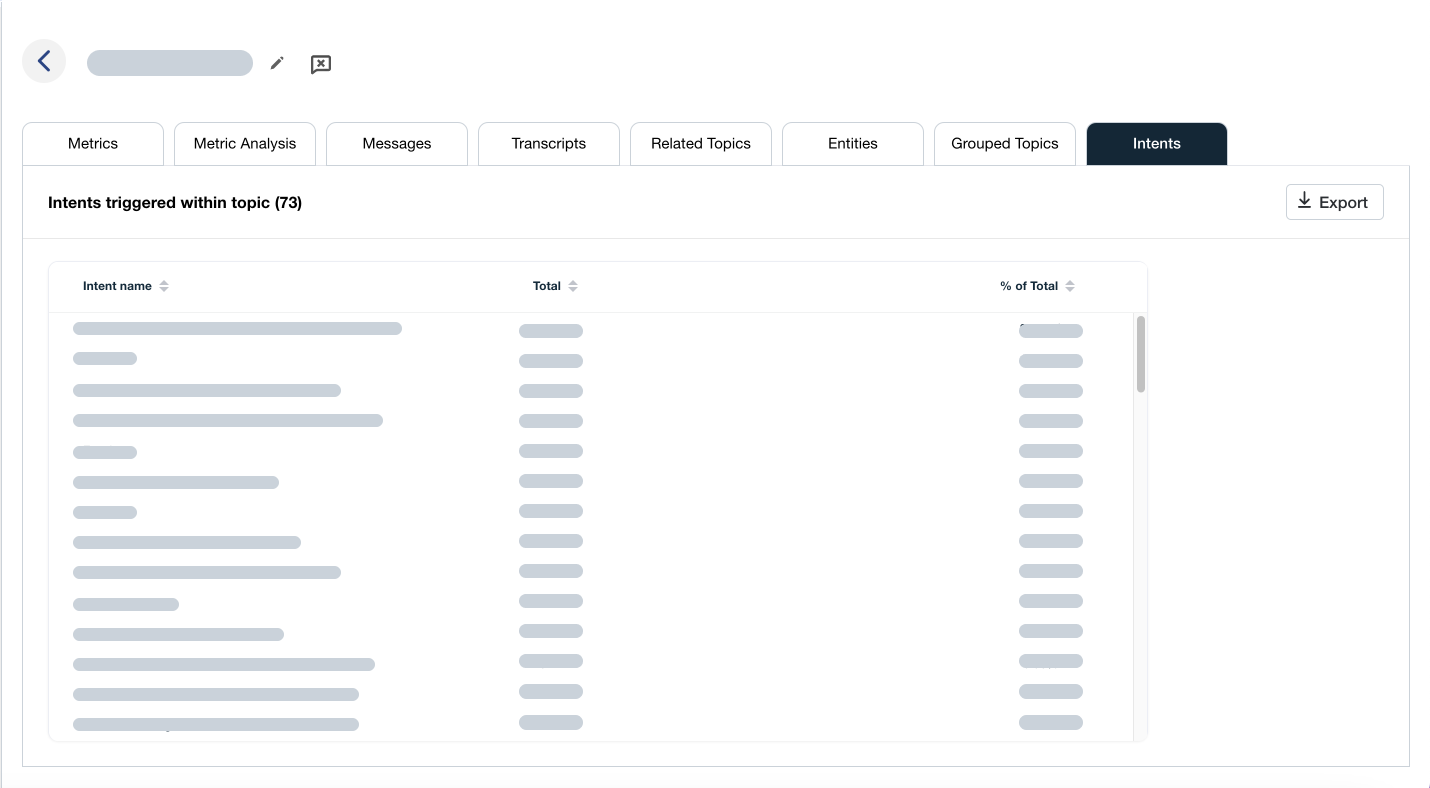

- Analyze Chat Transcripts: Dig into the wealth of information stored within the conversational data of chatbot interactions. Advanced analytics tools, especially those powered by Natural Language Processing (NLP), can identify sentiment, keywords, and patterns within the transcripts that reveal customer satisfaction while streamlining the analysis process.

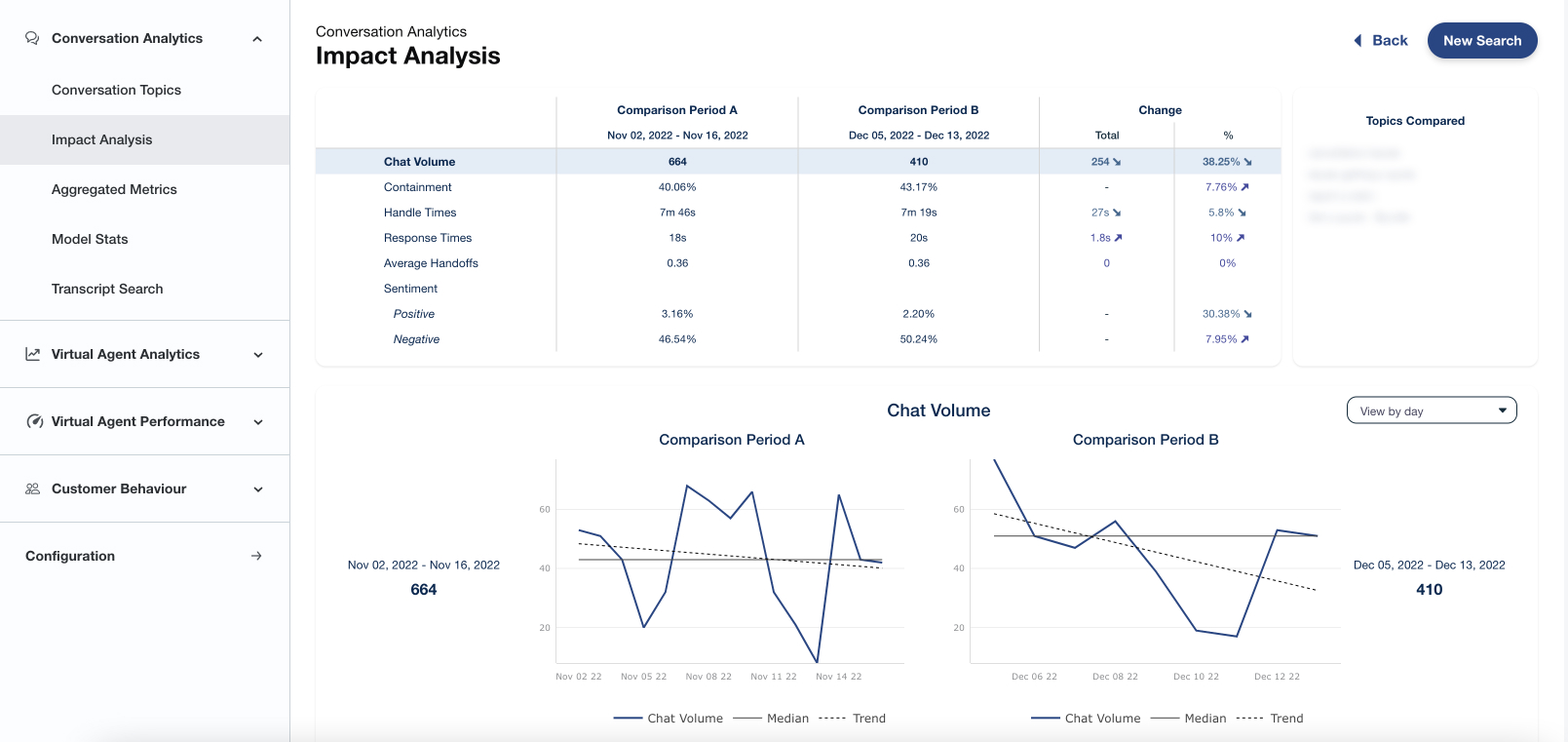

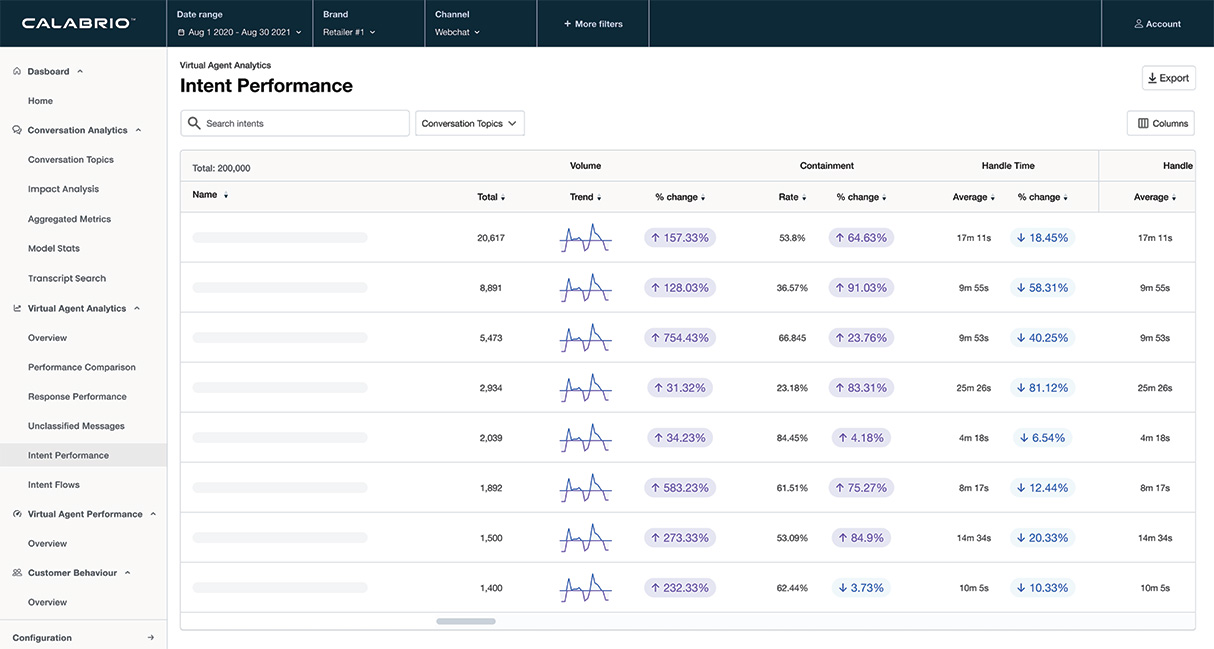

- Monitor Customer Behavior: Track how users interact with your bot. Metrics like session length, goal completion rates, and where users drop out of conversations can provide valuable clues about their level of satisfaction. Purpose-built bot analytics offerings can go even deeper, accelerating bot management tasks like intent discovery.

- Sentiment Analysis: Use sentiment analysis tools to detect customer emotions within support tickets, social media mentions, or chatbot conversations. This can give you real-time insight into positive, negative, or just plain neutral feelings about your offerings.

- Track Bot Escalations: A high number of customers requesting to speak to a live agent suggests issues within the bot experience. Monitoring these escalations can help identify specific areas where the bot’s functionality is negatively impacting CSAT.
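
As a rough illustration of the behavioral metrics described above, here is a minimal Python sketch that computes goal completion rate, escalation rate, average session length, and drop-off points from session logs. The `Session` schema, its field names, and the sample data are all hypothetical; your bot platform will export something different, so treat this as a starting point rather than a reference implementation.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One bot conversation. Every field name here is a hypothetical schema."""
    session_id: str
    duration_seconds: float
    completed_goal: bool  # the user reached the intended endpoint
    escalated: bool       # the user was handed to a live agent
    last_step: str        # last dialog step reached before the session ended


def summarize(sessions: list[Session]) -> dict:
    """Aggregate simple behavioral indicators across a batch of sessions."""
    if not sessions:
        raise ValueError("need at least one session")
    total = len(sessions)
    # A drop-off is a session that neither completed its goal nor escalated.
    drop_offs = Counter(
        s.last_step for s in sessions if not s.completed_goal and not s.escalated
    )
    return {
        "goal_completion_rate": sum(s.completed_goal for s in sessions) / total,
        "escalation_rate": sum(s.escalated for s in sessions) / total,
        "avg_session_seconds": mean(s.duration_seconds for s in sessions),
        "drop_off_steps": drop_offs.most_common(),
    }


# Made-up sample data, for illustration only.
sessions = [
    Session("a1", 95.0, True, False, "confirmation"),
    Session("a2", 240.0, False, True, "billing_lookup"),
    Session("a3", 40.0, False, False, "billing_lookup"),
    Session("a4", 65.0, False, False, "address_form"),
]
report = summarize(sessions)
print(report)
```

In this sample, half of the sessions end as silent drop-offs, which is exactly the kind of dissatisfaction an exit survey would never capture because those users left before a survey could be shown.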

An AI-first Approach to Measuring Bot Experience

In the era of big data and AI, conversational analytics software is a powerful tool that can be used to automatically find signals in every conversation, without having to rely on surveys.

For example, AI-driven chatbot analytics tools and call recording software can combine to help your team understand things such as:

- Did the customer repeat their question more than once? Multiple repetitions signal the bot isn’t understanding, leading to frustration.

- Did the bot produce the same response multiple times? This indicates rigidity and an inability to address individual needs, leading to a poor experience.

- Did the customer paraphrase their request, using different words to solicit a different answer? Rephrasing may mean trying to ‘trick’ the bot into a helpful answer, reflecting dissatisfaction.

- Did the request escalate to profanity, or were there other signs of frustration? These are clear red flags of a negative customer experience.

- Were there multiple requests to escalate that stayed contained within the bot, but didn’t actually resolve the issue? This hints at issues but may not result in a traditional escalation—a blind spot surveys typically miss.

- Did the customer leave the conversation before hitting the endpoint? Abrupt abandonment suggests the bot failed to help.

- Was there any explicit feedback from the customer about their negative experience? Direct criticism is invaluable, even if it’s unpleasant to read.
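
To make these signals concrete, the sketch below shows one simple, rule-based way to flag several of them in a single transcript using only the Python standard library. It is not how any particular analytics product works: the `Turn` structure, the word lists, the `reached_endpoint` flag, and the 0.6 similarity threshold are all assumptions chosen for illustration, and production tools would typically rely on trained NLP models instead of string matching.

```python
import re
from collections import Counter
from dataclasses import dataclass
from difflib import SequenceMatcher

# Hypothetical word lists; a real deployment would tune or replace these.
FRUSTRATION_TERMS = {"useless", "ridiculous", "stupid", "damn"}
ESCALATION_PATTERN = re.compile(r"\b(agent|human|representative|real person)\b")


@dataclass
class Turn:
    speaker: str  # "user" or "bot"
    text: str


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", text.lower())
    return " ".join(cleaned.split())


def detect_signals(turns: list[Turn], reached_endpoint: bool,
                   paraphrase_threshold: float = 0.6) -> dict:
    """Flag simple frustration signals in one conversation."""
    user = [normalize(t.text) for t in turns if t.speaker == "user"]
    bot = [normalize(t.text) for t in turns if t.speaker == "bot"]

    # Each extra copy of an identical message counts as one repetition.
    repeated_user = sum(c - 1 for c in Counter(user).values())
    repeated_bot = sum(c - 1 for c in Counter(bot).values())

    # Consecutive user messages that are similar but not identical
    # are treated as a rephrased request.
    paraphrases = sum(
        1
        for prev, cur in zip(user, user[1:])
        if prev != cur
        and SequenceMatcher(None, prev, cur).ratio() >= paraphrase_threshold
    )

    return {
        "repeated_user_messages": repeated_user,
        "repeated_bot_responses": repeated_bot,
        "paraphrased_requests": paraphrases,
        "escalation_requests": sum(bool(ESCALATION_PATTERN.search(m)) for m in user),
        "frustration_language": any(set(m.split()) & FRUSTRATION_TERMS for m in user),
        "abandoned": not reached_endpoint,
    }


# Made-up transcript, for illustration only.
transcript = [
    Turn("user", "Where is my refund?"),
    Turn("bot", "Sorry, I didn't get that."),
    Turn("user", "Where is my refund?"),
    Turn("bot", "Sorry, I didn't get that."),
    Turn("user", "Where's my refund money?"),
    Turn("bot", "Sorry, I didn't get that."),
    Turn("user", "This is useless, get me a human"),
]
signals = detect_signals(transcript, reached_endpoint=False)
print(signals)
```

Counting signals per conversation, rather than collapsing them into a single score, keeps each flag traceable to the turns that triggered it, which makes it easier to find the specific intents or responses that need fixing.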

By analyzing these conversational cues with the help of AI, you can uncover hidden insights about your chatbot’s performance and potential pain points that impact CSAT.

This granular, proactive data empowers you to make informed improvements, optimizing your bot for better customer experiences and higher satisfaction levels—without the need for a single survey.

Dig into the granular bot experience data you need to deliver improved experiences with Calabrio Bot Analytics. Book a demo today to see how Calabrio Bot Analytics can help you supercharge your chatbot and voicebot performance.